DesktopNow & Java Virtual Machines (JVMs)

*This post originally appeared on the AppSense blog prior to the rebrand in January 2017, when AppSense, LANDESK, Shavlik, Wavelink, and HEAT Software merged under the new name Ivanti.

Last time I started you off with a nice, easy blog post on troubleshooting. Now it’s time to venture deeper down the rabbit hole.

Let’s take a look at how our DesktopNow suite interacts with Java applications.

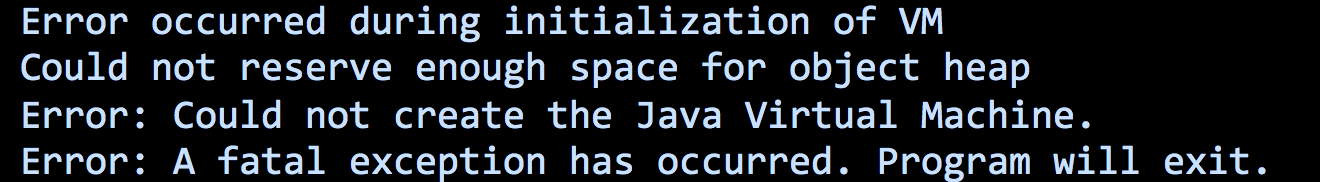

We have seen a trend of reported issues where Java applications no longer launch after either AM (Application Manager) or EM (Environment Manager) has been upgraded, so I think it’s worth delving a little deeper to explain what's occurring.

Before I explore the issues in technical detail, I should first explain at a high level how Java environments are created. Most admins have heard the phrase Java Virtual Machine, or JVM, but I bet most haven’t stopped to think, "Why on earth is it called that?" Well, it's because Java works on the same principle as any other virtual machine, at least in terms of memory reservation: resources need to be assigned or reserved before the machine is started. This means that the memory ‘heap’ is first reserved, a small proportion of it is allocated, and then the ‘machine’ is started. Those of you who regularly use Java applications in your environment will know that Java can be run with a number of switches, particularly:

Command line options: -Xms:<min size> -Xmx:<max size>

The -Xmx switch is the one we need to focus on as it sets the maximum heap size for the JVM.

| Option | Description |
| --- | --- |
| -Xmx | This option sets the maximum Java heap size. The Java heap (the “heap”) is the part of the memory where blocks of memory are allocated to objects and freed during garbage collection. Depending upon the kind of operating system you are running, the maximum value you can set for the Java heap can vary. |
| -Xms | The -Xms option sets the initial and minimum Java heap size. The Java heap (the “heap”) is the part of the memory where blocks of memory are allocated to objects and freed during garbage collection. Note: -Xms does not limit the total amount of memory that the JVM can use. |
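To make the reserve-versus-commit idea concrete, here is a minimal Python sketch. `parse_heap_size` and `heap_plan` are hypothetical helpers, not part of any JVM. They assume HotSpot-style size values such as `256m` or `1g` (the colon form shown above is not handled), and they simply model -Xmx as the size of the up-front reservation and -Xms as the portion committed at launch.

```python
# Hypothetical helpers: model -Xmx as the up-front reservation and -Xms as the
# portion committed at startup. Assumes HotSpot-style sizes like "256m" or "1g".
UNITS = {"k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def parse_heap_size(value: str) -> int:
    """Convert a size string such as '512m' into a number of bytes."""
    value = value.strip().lower()
    if value and value[-1] in UNITS:
        return int(value[:-1]) * UNITS[value[-1]]
    return int(value)  # a bare number is taken as bytes


def heap_plan(xms: str, xmx: str) -> dict:
    """Return how much address space is reserved vs. committed at launch."""
    reserved = parse_heap_size(xmx)   # the whole block must be reserved up front
    committed = parse_heap_size(xms)  # the initial slice actually backed by memory
    if committed > reserved:
        raise ValueError("-Xms must not exceed -Xmx")
    return {"reserved": reserved, "committed": committed}


print(heap_plan("256m", "1g"))  # {'reserved': 1073741824, 'committed': 268435456}
```

The point to take away is that the reservation is sized by -Xmx, not -Xms, which is why -Xmx is the switch to focus on.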

Java will try to allocate a single contiguous block of memory upon each launch (although opinion seems to differ, seemingly depending on which JVM implementation is used). When the -Xmx switch is used, you are effectively specifying the maximum amount of memory, and therefore the size of the block that needs to be reserved. Ideally, applications should be profiled to understand their memory requirements.
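The contiguous requirement is what makes fragmentation bite: the total free memory can comfortably exceed -Xmx while no single free block is large enough. A minimal sketch, using made-up free regions (the addresses and sizes are purely illustrative):

```python
MB = 1024 * 1024

# Hypothetical free regions left in a process address space: (start, size).
free_regions = [(0x10000000, 700 * MB), (0x40000000, 600 * MB)]


def largest_free_block(regions):
    """Size of the biggest single hole; this, not the total, limits -Xmx."""
    return max((size for _, size in regions), default=0)


def can_reserve(regions, request):
    """A contiguous reservation succeeds only if one hole is large enough."""
    return largest_free_block(regions) >= request


print(sum(size for _, size in free_regions) // MB)   # 1300 (MB free in total)
print(largest_free_block(free_regions) // MB)        # 700
print(can_reserve(free_regions, 1024 * MB))          # False
print(can_reserve(free_regions, 512 * MB))           # True
```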

Generally, these types of issues occur more frequently when using a 32-bit JVM, primarily because of the limited amount of address space available for the heap in this architecture. By default, the OS gives a 32-bit process 2GB of ‘User’ address space and reserves the other 2GB for ‘Kernel’ or system use; however, this can be changed. 64-bit processes do not have such an architectural limitation, as their address spaces are vast in comparison: 8TB (terabytes) each for User and Kernel address space. This increases again to 128TB in Windows 8.1 and Server 2012 R2.
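These figures are easy to sanity-check. This short snippet treats the quoted GB/TB values as binary units (an assumption) and compares the default 32-bit User space with the 64-bit ones:

```python
GiB = 2 ** 30
TiB = 2 ** 40

address_space_32 = 2 ** 32          # 4 GB addressable by a 32-bit pointer
user_32 = address_space_32 // 2     # default 2 GB User / 2 GB Kernel split
user_64 = 8 * TiB                   # 64-bit User space before Windows 8.1 / Server 2012 R2
user_64_new = 128 * TiB             # 64-bit User space from Windows 8.1 / Server 2012 R2

print(user_32 // GiB)               # 2
print(user_64 // user_32)           # 4096
print(user_64_new // user_64)       # 16
```

Even before Windows 8.1, a 64-bit process has 4,096 times the User address space of a default 32-bit one, which is why contiguous heap reservations rarely fail there.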

Memory Limits for Windows and Windows Server Releases

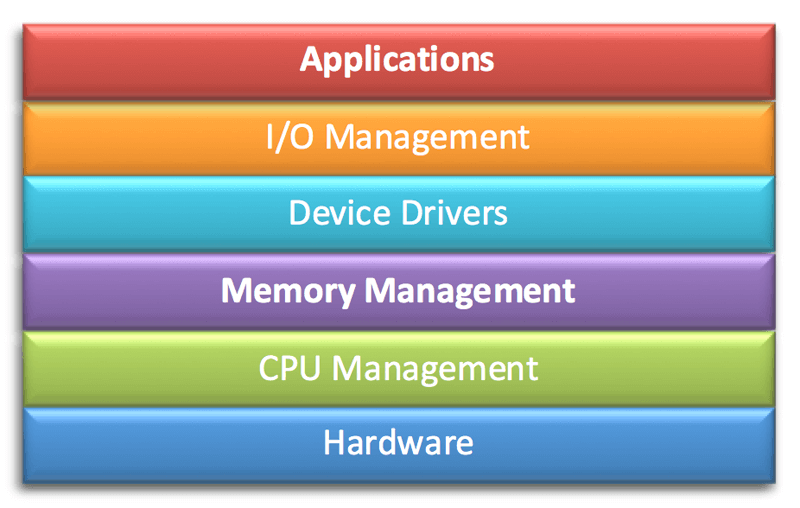

When talking about applications and their interactions, it’s easy to forget that they sit at the highest level of the OS stack. In this case, the issue emanates from much lower down in the stack: we are talking about how memory is allocated across the entire system, not just for applications. Therefore, knowledge at this lower level is required for a true understanding of the issue.

With the issues we have seen here, the primary cause is that the AM, EM and related Microsoft libraries (DLLs) are loaded into empty address space before Java is loaded. This fragments the free memory space available to Java when it starts.
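Here is a minimal sketch of that effect. It models a 32-bit User address space as a list of occupied `(base, size)` ranges and reports the largest free gap. The executable and system DLL placement and both module sizes are assumptions; only the two base addresses (0x67C00000 and 0x67CF0000, for AMAppHook.dll and AmLdrAppInit.dll) come from the Known Issues section of this post.

```python
MB = 1024 * 1024
USER_START, USER_END = 0x00010000, 0x7FFF0000  # approximate 32-bit User range


def free_blocks(occupied, start=USER_START, end=USER_END):
    """Return the (start, size) gaps left between occupied (base, size) ranges."""
    gaps, cursor = [], start
    for base, size in sorted(occupied):
        if base > cursor:
            gaps.append((cursor, base - cursor))
        cursor = max(cursor, base + size)
    if end > cursor:
        gaps.append((cursor, end - cursor))
    return gaps


def largest_gap(occupied):
    return max(size for _, size in free_blocks(occupied))


# Hypothetical layout: an executable low down, system DLLs from 0x70000000 up.
baseline = [(0x00400000, 1 * MB), (0x70000000, 256 * MB)]
# Add two modules at the fixed bases quoted in this post (sizes are assumed).
hooked = baseline + [(0x67C00000, 0xF0000), (0x67CF0000, 0x20000)]

print(largest_gap(baseline) // MB)  # 1787
print(largest_gap(hooked) // MB)    # 1655
```

Barely more than 1MB of modules costs the largest free block over 130MB, simply because of where they sit.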

Known Issues

Technically, there are three distinct issues, all caused in principle by the root cause outlined above.

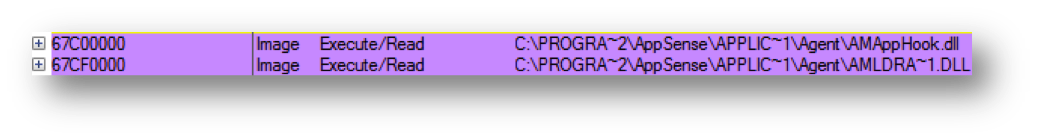

- Application Manager: AMAppHook.dll and AmLdrAppInit.dll are loaded at static base addresses, 0x67C00000 and 0x67CF0000 respectively. Technically this is in no way incorrect; however, it leaves the memory region fragmented.

- With the arrival of EM 8.5 and AM 8.8, we introduced a new version of Microsoft Detours, which forced allocations into a lower memory address range, causing more fragmentation of the available free space than in previous versions.

The Detours library enables interception of function calls with user-defined calls. The first few instructions of the target function are replaced with an unconditional jump to the user-provided detour function. Instructions from the target function are preserved in a trampoline function. The trampoline consists of the instructions removed from the target function and an unconditional branch to the remainder of the target function.

In layman's terms, Detours allows you to insert your own function calls to replace or extend the target function. The original target function's code is placed in a function called a ‘trampoline’, which can then be called from the newly defined user-provided function.
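As a loose analogy only, the same detour/trampoline relationship can be sketched in Python. Real Detours rewrites machine instructions in the target and copies them into an executable trampoline (which needs its own memory allocation); here we just swap a function reference. `library`, `original_open` and `attach_detour` are hypothetical names for illustration.

```python
import types

# Hypothetical "library" exposing a target function we want to intercept.
library = types.SimpleNamespace()


def original_open(path):
    return f"opened {path}"


library.open_file = original_open


def attach_detour(namespace, name, make_detour):
    """Redirect namespace.name to a detour; return the trampoline to the original."""
    trampoline = getattr(namespace, name)
    setattr(namespace, name, make_detour(trampoline))
    return trampoline


calls = []


def make_logging_detour(trampoline):
    def detour(path):
        calls.append(path)        # user-provided behaviour runs first...
        return trampoline(path)   # ...then the original code runs via the trampoline
    return detour


trampoline = attach_detour(library, "open_file", make_logging_detour)
print(library.open_file("app.jar"))  # opened app.jar
print(calls)                         # ['app.jar']
```

Callers still invoke `library.open_file` as before; they simply reach the detour first, and the detour decides when to run the original via the trampoline.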

The trampoline function is the key: like any other object, it requires a memory allocation. Detours uses a complex algorithm to allocate this function's memory, and when the issue occurred, the algorithm was seen to be allocating memory 1GB above or below the target library. When the Java application failed, this allocation sat in the middle of the memory block that the JVM was attempting to allocate, so the JVM's own allocation failed in turn.
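A minimal sketch of why one small trampoline matters. For simplicity it places a single 64KB trampoline exactly 1GB below a hypothetical target library (the real Detours search is more involved), then checks whether a hypothetical 1GB -Xmx reservation still fits. The layout and target address are assumptions.

```python
MB = 1024 * 1024
GB = 1024 * MB
USER_START, USER_END = 0x00010000, 0x7FFF0000
ALLOCATION_GRANULARITY = 0x10000  # 64 KB, the usual Windows VirtualAlloc granularity


def largest_gap(occupied, start=USER_START, end=USER_END):
    """Largest free run between the (base, size) ranges in `occupied`."""
    best, cursor = 0, start
    for base, size in sorted(occupied):
        best = max(best, base - cursor)
        cursor = max(cursor, base + size)
    return max(best, end - cursor)


# Hypothetical layout: executable low down, system DLLs from 0x70000000 up.
layout = [(0x00400000, 1 * MB), (0x70000000, 256 * MB)]
target_library = 0x75000000                  # hypothetical hooked system DLL
trampoline_base = target_library - 1 * GB    # 0x35000000, "1 GB below" the target

with_trampoline = layout + [(trampoline_base, ALLOCATION_GRANULARITY)]
jvm_request = 1024 * MB                      # hypothetical -Xmx of 1 GB

print(largest_gap(layout) >= jvm_request)           # True
print(largest_gap(with_trampoline) >= jvm_request)  # False
```

A single 64KB allocation splits one roughly 1.7GB hole into two pieces of under 1GB each, so the reservation fails even though almost all of the memory is still free.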

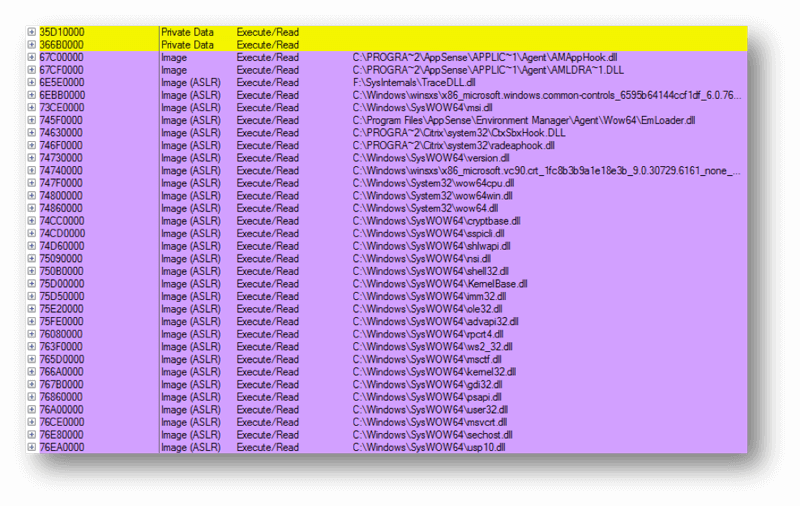

- Detours uses a start address that needs to be below where the system DLLs are located. Using a top-down allocation approach, it works down through the memory ranges until a large enough free block is found. In version 2.1, this start address is high up in the memory spectrum at 0x70000000; above it, the system DLLs are loaded. As operating systems become increasingly complex, the number of system DLLs is increasing, needing a larger proportion of reserved address space. In version 3.0 of Detours, Microsoft moved the start address further down the spectrum to 0x50000000, which now coincidentally aligns with the ASLR (Address Space Layout Randomization) address range. Any trampoline functions are loaded below this address, again increasing the likelihood of memory fragmentation problems under certain circumstances.
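The effect of moving the start address can be sketched with a simplified top-down search: step down from the start address in 64KB increments until the requested size fits. This is a stand-in, not Detours' actual algorithm. The memory layout and the 1.5GB -Xmx are hypothetical; the 0x70000000 and 0x50000000 start addresses are the ones quoted above.

```python
MB = 1024 * 1024
USER_START, USER_END = 0x00010000, 0x7FFF0000
GRANULARITY = 0x10000  # 64 KB allocation granularity


def overlaps(base, size, occupied):
    return any(base < b + s and b < base + size for b, s in occupied)


def top_down_alloc(occupied, start, size):
    """Walk down from `start` in 64 KB steps until `size` bytes fit (a simplified
    stand-in for Detours' real search, which is more involved)."""
    base = (start - size) // GRANULARITY * GRANULARITY
    while base >= USER_START:
        if not overlaps(base, size, occupied):
            return base
        base -= GRANULARITY
    return None


def largest_gap(occupied, start=USER_START, end=USER_END):
    best, cursor = 0, start
    for base, size in sorted(occupied):
        best = max(best, base - cursor)
        cursor = max(cursor, base + size)
    return max(best, end - cursor)


layout = [(0x00400000, 1 * MB), (0x70000000, 256 * MB)]  # hypothetical
jvm_request = 1536 * MB                                   # hypothetical -Xmx

for version, start in (("2.1", 0x70000000), ("3.0", 0x50000000)):
    tramp = top_down_alloc(layout, start, GRANULARITY)
    gap = largest_gap(layout + [(tramp, GRANULARITY)])
    print(version, hex(tramp), gap // MB, gap >= jvm_request)
# 2.1 0x6fff0000 1786 True
# 3.0 0x4fff0000 1274 False
```

With the 2.1 start address, the trampoline hugs the system DLLs and the big hole survives; with the 3.0 start address, it lands in the middle of the address space.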

Applied Optimizations

- Code optimization reduces the occurrence of these types of issues. In Application Manager 8.9 Service Pack 1, the Application Manager modules have been ASLR enabled.

This randomizes the memory allocation between 0x50000000 and 0x78000000 upon each system boot. Now, those of you paying attention might be saying, ‘Hang on, 0x50000000 is lower than 0x67C00000! So the issue will still occur?’ Correct, well, halfway at least. The ASLR implementation means that each allocation is completed top-down, so the address ranges at the very top of the memory spectrum are more than likely ASLR enabled. Below is a representation of my workstation's memory map; from this you can see that from 0x76EA0000 down to 0x6E5E0000, each ‘Image’ has been tagged with an ASLR flag.
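A rough model of why ASLR-enabled modules tend to cluster near the top of the range: pick a random per-boot offset, then pack images downward from 0x78000000. The 256 possible 64KB slots and the module sizes are assumptions for illustration, and real Windows ASLR details vary between versions.

```python
import random

ASLR_LOW, ASLR_HIGH = 0x50000000, 0x78000000  # range quoted in this post
GRANULARITY = 0x10000                          # 64 KB


def place_aslr_images(image_sizes, rng):
    """Simplified per-boot model: pick a random bias, then pack images top-down."""
    bias = rng.randrange(256) * GRANULARITY   # assumption: 256 possible 64 KB slots
    top = ASLR_HIGH - bias
    bases = []
    for size in image_sizes:
        rounded = -(-size // GRANULARITY) * GRANULARITY   # round up to 64 KB
        top -= rounded
        if top < ASLR_LOW:
            raise MemoryError("ASLR range exhausted")
        bases.append(top)
    return bases


image_sizes = [0x1A0000, 0xF0000, 0x20000]  # hypothetical module sizes
for boot in range(3):  # a fresh bias on every simulated boot
    print([hex(b) for b in place_aslr_images(image_sizes, random.Random(boot))])
```

The bases change from boot to boot, but they always stay within a few tens of megabytes of 0x78000000. The bottom of the range (0x50000000) is only reached once the images above have filled the space.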

Further optimization, in the form of a delay configurable via Console > ‘Custom Settings > AppHookDelayLoad’, has been added in this release to delay the Application Manager hook. This allows Java to allocate its memory before Application Manager or Microsoft Detours do, reducing the likelihood of a fragmented memory region.
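Why load order matters can be sketched as follows. This does not model how AppHookDelayLoad is implemented. It assumes a hypothetical JVM that reserves the lowest block that fits, and a hook module that is relocated top-down if its preferred base is already taken. The layout, module size and 1700MB -Xmx are illustrative; only the 0x67C00000 base comes from this post.

```python
MB = 1024 * 1024
USER_START, USER_END = 0x00010000, 0x7FFF0000
GRANULARITY = 0x10000


def overlaps(base, size, occupied):
    return any(base < b + s and b < base + size for b, s in occupied)


def reserve_first_fit(occupied, size):
    """Hypothetical JVM-style reservation: lowest 64 KB-aligned base that fits."""
    base = USER_START
    while base + size <= USER_END:
        if not overlaps(base, size, occupied):
            return base
        base += GRANULARITY
    return None


def load_module(occupied, preferred, size, ceiling=0x70000000):
    """Load at the preferred base if free; otherwise relocate top-down below ceiling."""
    if not overlaps(preferred, size, occupied):
        return preferred
    base = ceiling - size
    while base >= USER_START:
        if not overlaps(base, size, occupied):
            return base
        base -= GRANULARITY
    return None


layout = [(0x00400000, 1 * MB), (0x70000000, 256 * MB)]  # hypothetical exe + system DLLs
HOOK_BASE, HOOK_SIZE = 0x67C00000, 1 * MB                # base from this post; size assumed
JVM_HEAP = 1700 * MB                                      # hypothetical -Xmx

# No delay: the hook loads first and the heap reservation fails.
print(reserve_first_fit(layout + [(HOOK_BASE, HOOK_SIZE)], JVM_HEAP))  # None

# Delayed hook: the JVM reserves first, then the hook is placed elsewhere.
heap_base = reserve_first_fit(layout, JVM_HEAP)
hook_base = load_module(layout + [(heap_base, JVM_HEAP)], HOOK_BASE, HOOK_SIZE)
print(hex(heap_base), hex(hook_base))  # 0x500000 0x6ff00000
```

The same two allocations succeed or fail purely depending on which one claims the address space first.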

- Working with Microsoft, we validated that this behaviour was due to a change in the Detours source code. A solution has been implemented to optimize the code base for 32-bit versions in

- There are currently no optimizations in place to reduce occurrences of this type of issue; however, I would stress that no reported cases have been attributed to it.

Summary

To sum up, under normal working conditions the operating system handles fragmented memory space well and without any performance impact. JVM implementations can differ, but 32-bit applications running on an implementation that architecturally requires a contiguous reservation will more than likely hit this issue sooner or later. Unfortunately for application vendors such as AppSense, there is no quick fix or quick win to help our customers. All we can do is optimize our components as efficiently as possible to reduce occurrences and maximize the memory available to these applications.
